Steepest Descent Method

The Steepest Descent (also known as Gradient Descent) method is one of the simplest \(1^{st} \) order optimization algorithms. In order to find the optimal solution of a function, this method calculates the first derivative of the function.

1. Method

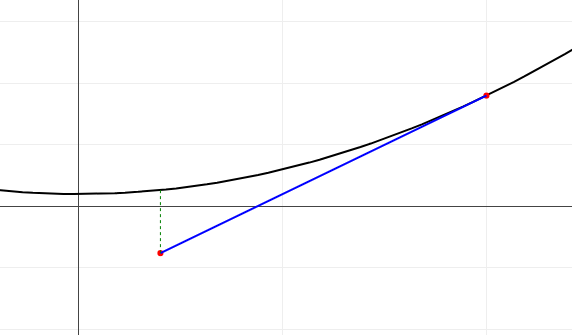

Consider a function \(f: \mathbb{R}^n \to \mathbb{R}\) that is differentiable, and let \(x_0\) be the initial guess. The steepest descent method iteratively updates the prior point. For the \(1^{st}\) iteration the update is done using the formula:

\[ x_{1} = x_0 + t_0 \ d_0 \]where \(t_0\) is the step size (or learning rate) and \( d_0 = -\nabla f(x_0) \) is the descent direction, which is negative of the gradient of the function.

For \((k+1)^{th}\) iteration, the update rule becomes: \[ x_{k+1} = x_{k} + t_k \ d_k \] where \(t_k\) and \(d_k\) are the step size and descent direction at iteration \(k\), respectively. The descent direction \( d_k \) is given by : \( d_k = -\nabla f(x_k)\). Note, all the optimization algorithms differ in the way they calculate the step length and descent direction.

The iterative process continues until predefined convergence criteria is met. Typical convergence criteria include: If change in function values between iterations is less than a predefined threshold \(\varepsilon\), change in the input value between iterations is small, or if the gradient is close to zero, i.e., \(|\nabla f(x_k)| < \varepsilon\).

The step size \(t_k\) can be determined using various methods, including:

- Constant step size

- Armijo Rule

- Exact line search

1.2 Line search method

The step length \(t_k\) is determined using a line search method, which aims to find the optimal step size that minimizes the function along the direction of the gradient.

The most common line search methods include:

Constant step length

In this method, a fixed step length is used for all iterations. This is simple but may not be optimal for all iterations.

Armijo rule

The Armijo rule is a backtracking line search method that adjusts the step length based on the decrease in the function value. It ensures that the step length is not too large, which could lead to overshooting the minimum.

Exact line search

The exact line search method finds the optimal step length by minimizing the function along the direction of the gradient. This is computationally expensive but can lead to faster convergence.

The choice of line search method can significantly affect the convergence rate of the steepest descent method.

2. Algorithm

The algorithm for the steepest descent method can be summarized as follows:

- Initialize \(x_0\) and set a tolerance level \(\varepsilon\).

- Compute the gradient \(\nabla f(x_k)\).

- Compute the descent direction \(d_k = - \nabla f(x_k)\).

- Determine the step length \(t_k\) using method of choice (constant step size, Armijo rule, or exact line search)

- Update the point: \(x_{k+1} = x_k + t_k \ d_k\).

- Check for convergence:

- If \(|\nabla f(x_k)| < \varepsilon\), exit the loop

- Otherwise \(k = k + 1\), go to step 2.

3. Example

Consider the function \(f(x) = x^2 + 2x + 1\). The gradient is given by \(\nabla f(x) = 2x + 2\). Starting from an initial guess \(x_1 = 4\), we can apply the steepest descent method with a constant step size of \(t = 0.1\). The iterations would look like this: \[ \text{Iteration 1 : } x_1 = x_0 - t \cdot \nabla f(x_0) = 4 - 0.1 \cdot (2 \cdot 4 + 2) = 3 \] \[ \text{Iteration 2 : } x_2 = x_1 - t \cdot \nabla f(x_1) = 3 - 0.1 \cdot (2 \cdot 3 + 2) = 2.2 \] and iterative process continues, until convergence condition, \(|f(x_{k+1}) - f(x_k)| < \varepsilon\) or, \(|x_{k+1} - x_k| < \varepsilon\) or \(|\nabla f(x_k)| < \varepsilon\), is met.

4. Discussion

The steepest descent method is a simple and effective 1st derivative optimization algorithm. For differentiable functions, it always converges to a local minimum under appropriate choice of step length. However, the choice of step length and line search method can significantly affect the convergence rate.